Code Execution in Kestra’s AI Agents Powered by Judge0

AI agents enable new workflow orchestration patterns. With the release of Kestra 1.0, teams can now run autonomous AI tasks that combine large language models (LLMs), memory, and external tools to dynamically decide which steps to take to accomplish a given goal. Among the tools available to these agents is code execution, integrated through Judge0.

The code execution tool lets AI agents run LLM-generated code to perform mathematical calculations or analyze data in a safe orchestration environment.

# Why Code Execution Matters for AI Agents

LLMs excel at pattern recognition, explanation, and producing step-by-step solutions, but they are not built for executing precise deterministic calculations. An AI agent without tools may hallucinate numbers, miscount items in a log, or generate invalid cryptographic hashes.

In Kestra, adding the code execution tool gives agents a way to:

- Run exact mathematical or statistical computations

- Parse and aggregate structured data

- Perform simulations

- Execute and validate code in multiple programming languages

By combining LLM reasoning with Judge0 execution, Kestra agents can return results that were actually computed by running code rather than predicted by the model.
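As an illustration of the "parse and aggregate" case, here is a sketch of the kind of code an agent might generate and hand to the execution tool to count log entries by severity. The log format, the sample lines, and the function name `count_levels` are assumptions made for this example; neither Kestra nor Judge0 prescribes them.

```python
from collections import Counter

# Hypothetical log lines; in a real flow the agent would receive these as input.
SAMPLE_LOG = """\
2024-05-01T10:00:00Z INFO service started
2024-05-01T10:00:05Z ERROR database timeout
2024-05-01T10:00:07Z WARN retrying connection
2024-05-01T10:00:09Z ERROR database timeout
2024-05-01T10:00:12Z INFO connection restored
"""


def count_levels(log_text: str) -> Counter:
    """Count entries per severity, assuming '<timestamp> <LEVEL> <message>' lines."""
    counts = Counter()
    for line in log_text.splitlines():
        parts = line.split(maxsplit=2)
        if len(parts) >= 2:  # skip blank or malformed lines
            counts[parts[1]] += 1
    return counts


if __name__ == "__main__":
    print(dict(count_levels(SAMPLE_LOG)))
```

Counting is exactly the kind of step where a model answering from the text alone can be off by one; running the loop removes that guesswork.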

# Minimal Example

Here’s a minimal example where an AI Agent uses Judge0 to compute the square root of a number:

    id: calculator_agent
    namespace: company.ai

    inputs:
      - id: nr
        type: INT
        defaults: 1764

    tasks:
      - id: agent
        type: io.kestra.plugin.ai.agent.AIAgent
        provider:
          type: io.kestra.plugin.ai.provider.GoogleGemini
          apiKey: "{{kv('GEMINI_API_KEY')}}"
          modelName: gemini-2.5-flash
        prompt: What is the square root of {{inputs.nr}}?
        tools:
          - type: io.kestra.plugin.ai.tool.CodeExecution
            apiKey: "{{kv('RAPID_API_KEY')}}"

Without the CodeExecution tool, modern LLMs might still return the correct number. But with more complex use cases, e.g. a mortgage amortization calculation, relying on the LLM alone can produce an invalid output. Judge0 ensures the math is executed correctly.
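To give a sense of what the mortgage case involves, the following is a minimal sketch of code an agent could generate for it, using the standard fixed-payment annuity formula. The function names and the loan figures in the usage lines are assumptions chosen for illustration, not part of the Kestra or Judge0 APIs.

```python
def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed monthly payment for a fully amortizing loan (annuity formula)."""
    n = years * 12            # number of monthly payments
    r = annual_rate / 12      # monthly interest rate
    if r == 0:
        return principal / n  # interest-free loan: equal principal slices
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


def amortization_schedule(principal: float, annual_rate: float, years: int):
    """Yield (month, interest, principal_paid, remaining_balance) per payment."""
    payment = monthly_payment(principal, annual_rate, years)
    r = annual_rate / 12
    balance = principal
    for month in range(1, years * 12 + 1):
        interest = balance * r
        principal_paid = payment - interest
        balance -= principal_paid
        yield month, interest, principal_paid, balance


if __name__ == "__main__":
    # Hypothetical loan: 300,000 at 6% nominal annual interest over 30 years.
    print(round(monthly_payment(300_000, 0.06, 30), 2))  # 1798.65
    print(next(amortization_schedule(300_000, 0.06, 30)))
```

The schedule has 360 dependent steps, each feeding its balance into the next, which is where a model reasoning in text tends to drift and where executing the loop pays off.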

# Expanding Beyond Math

Through CodeExecution, Kestra agents can handle tasks where LLMs alone often fail:

- Data transformation: parse logs, count events, or aggregate JSON

- Cryptography: compute real hashes like SHA-256

- Validation: run code snippets that validate results before calling downstream services
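The validation case can be sketched as a small check that an agent runs on a JSON payload before anything downstream is called. The payload shape (`items`, `quantity`, `unit_price_cents`, `total_cents`) and the function name `validate_order` are hypothetical and exist only for this example.

```python
import json


def validate_order(payload: str) -> list:
    """Return a list of problems found in a JSON order; empty means it passed."""
    try:
        order = json.loads(payload)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(order, dict):
        return ["payload is not a JSON object"]

    problems = []
    items = order.get("items", [])
    if not items:
        problems.append("order has no items")
    # Integer cents avoid floating-point drift when summing prices.
    expected = sum(i["quantity"] * i["unit_price_cents"] for i in items)
    if order.get("total_cents") != expected:
        problems.append(
            f"total_cents is {order.get('total_cents')}, expected {expected}"
        )
    return problems


if __name__ == "__main__":
    sample = (
        '{"items": [{"quantity": 2, "unit_price_cents": 450}],'
        ' "total_cents": 900}'
    )
    print(validate_order(sample))
```

An empty list means the payload passed; any other result gives the agent a concrete reason to stop before the downstream call.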

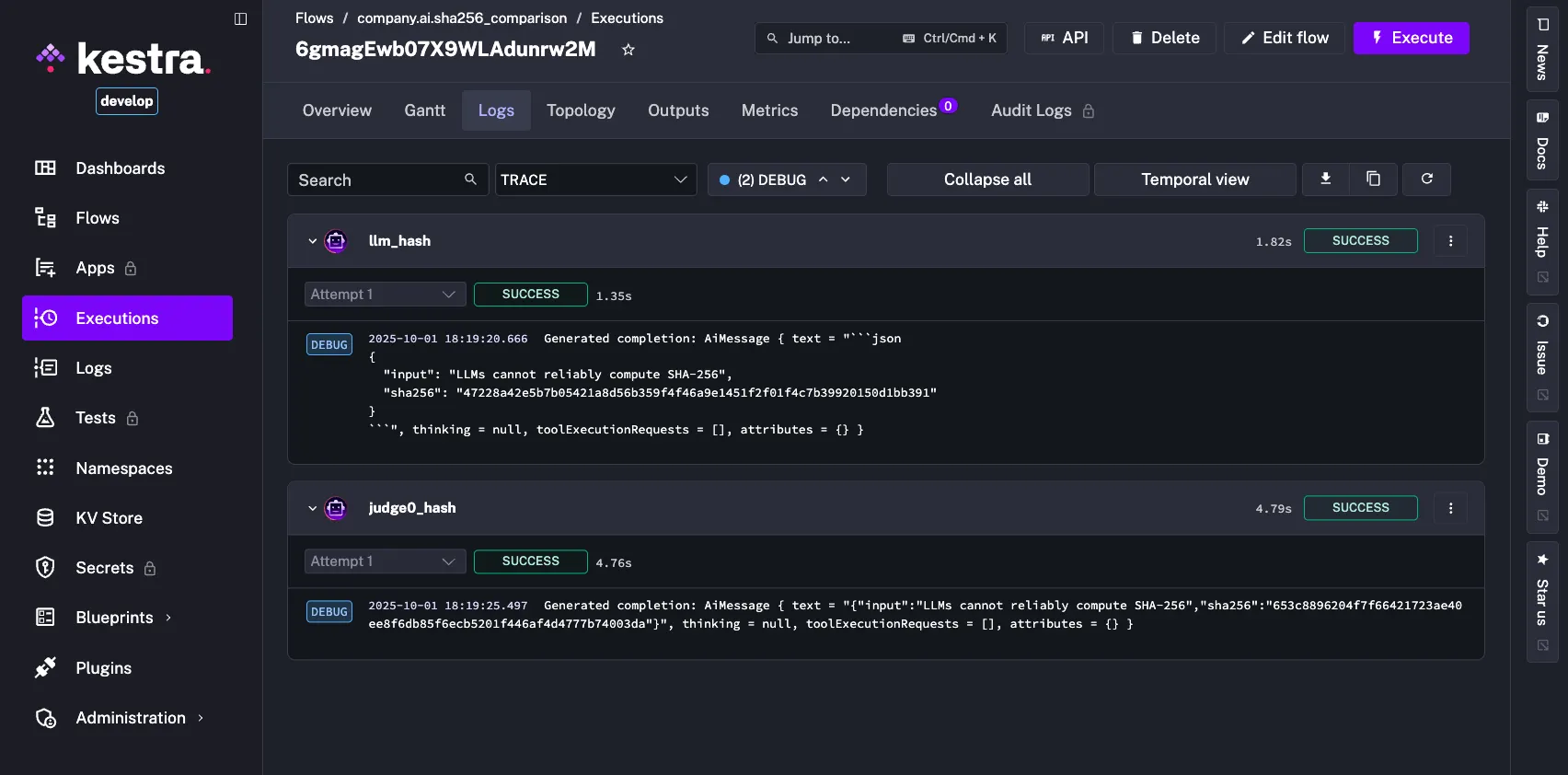

Take cryptography as an example. LLMs can describe how hashing works but cannot reliably produce the correct digest. Here’s a flow that compares an LLM-only attempt with a Judge0-powered execution:

    id: sha256_comparison
    namespace: company.ai

    inputs:
      - id: text
        type: STRING
        defaults: LLMs cannot reliably compute SHA-256

    tasks:
      - id: llm_hash
        type: io.kestra.plugin.ai.agent.AIAgent
        description: ❌ LLM-only attempt (likely to hallucinate a fake hash)
        provider:
          type: io.kestra.plugin.ai.provider.GoogleGemini
          apiKey: "{{ kv('GEMINI_API_KEY') }}"
          modelName: gemini-2.5-flash
        systemMessage: |
          Compute the SHA-256 hash.
          Return only {"input": "<string>", "sha256": "<hash>"}.
        prompt: Compute the SHA-256 hash of "{{ inputs.text }}"

      - id: judge0_hash
        type: io.kestra.plugin.ai.agent.AIAgent
        description: ✅ Judge0-powered hash using Node's crypto (reliable)
        provider:
          type: io.kestra.plugin.ai.provider.GoogleGemini
          apiKey: "{{ kv('GEMINI_API_KEY') }}"
          modelName: gemini-2.5-flash
        systemMessage: Always call the CodeExecution tool.
        prompt: |
          Compute the SHA-256 hash of "{{ inputs.text }}" by running code
          using Node's `crypto` library.
          Return only {"input": "<string>", "sha256": "<hex>"}.
        tools:
          - type: io.kestra.plugin.ai.tool.CodeExecution
            apiKey: "{{ kv('RAPID_API_KEY') }}"

Running this flow shows the difference immediately:

- LLM-only (`llm_hash`): returns a plausible but incorrect 64-character hex string.

- CodeExecution (`judge0_hash`): executes real JavaScript with Node’s `crypto` library and produces a correct, deterministic hash every time.

This illustrates why code execution is an essential tool for Kestra’s agents.
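For readers who want to check a digest locally, here is a minimal sketch of the same computation. It uses Python's standard `hashlib` rather than the Node `crypto` library that the flow's prompt asks for; SHA-256 is a fixed algorithm, so any correct implementation returns the same hex digest for the same UTF-8 bytes. The function name `sha256_hex` is an assumption for this example.

```python
import hashlib
import json


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of the UTF-8 encoding of text, as lowercase hex."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    text = "LLMs cannot reliably compute SHA-256"
    # Same output shape the flow's prompt requests.
    print(json.dumps({"input": text, "sha256": sha256_hex(text)}))
```

Comparing this output with what `llm_hash` and `judge0_hash` return is a quick way to see which task actually computed the hash.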

# Declarative Orchestration with AI

AI Agents in Kestra are declarative: you describe what you want, and the agent figures out how to achieve it.

Code execution with Judge0 plays an important role in this setup: it ensures correctness, determinism, and reproducibility in AI workflows.

Together, they enable workflows that adapt in real time while remaining safe and observable.

# Get Started

The integration of Kestra AI Agents with Judge0 reflects a broader shift: orchestration systems are becoming a fusion of reasoning (LLMs) and execution (tools). As teams build more adaptive workflows, having access to both becomes critical.

👉 Try Kestra 1.0 with AI Agents: https://kestra.io/1-0

👉 Explore Judge0: https://judge0.com